How I am running my Ghost blog on Azure

I recently had to migrate off a managed WordPress blogging platform, and after some research I discovered Ghost. I didn't want to use a shared platform because I wanted more control over my blog, but I also didn't want to build from source or spend a lot of effort keeping the blog up to date. This post documents the solution I came up with, so that I can replicate it in the future if needed.

Ghost Docker Image

The Ghost team provides a number of official Docker images that can be used to run a Ghost instance. If you don't know about Docker, you must be living under a rock :) Docker provides tooling for packaging and running containerized applications. If you are wondering whether Docker (and containers) has legs in the Microsoft ecosystem: the Windows team has invested significant effort in adding the core constructs needed to run containers on the Windows platform.

At launch, Windows Server 2016 LTSC also included a license to run an enterprise version of Docker at no additional cost, and this scenario is fully supported by Microsoft! Also keep in mind that when running your workloads on-premises, it is recommended that you use the latest Semi-Annual Channel release of Windows Server, because it includes the latest container features.

So how can we run Ghost on a Docker host? Assuming the Docker tools are already installed on Windows, you first need to switch to Linux container mode. Once in this mode, you can run the following command, which maps port 3001 on the host to port 2368, Ghost's default port inside the container:

$ docker run -d -p 3001:2368 ghost:2

Tip: Windows 10 can now run Linux containers "natively", without switching modes, using LCOW (Linux Containers on Windows).

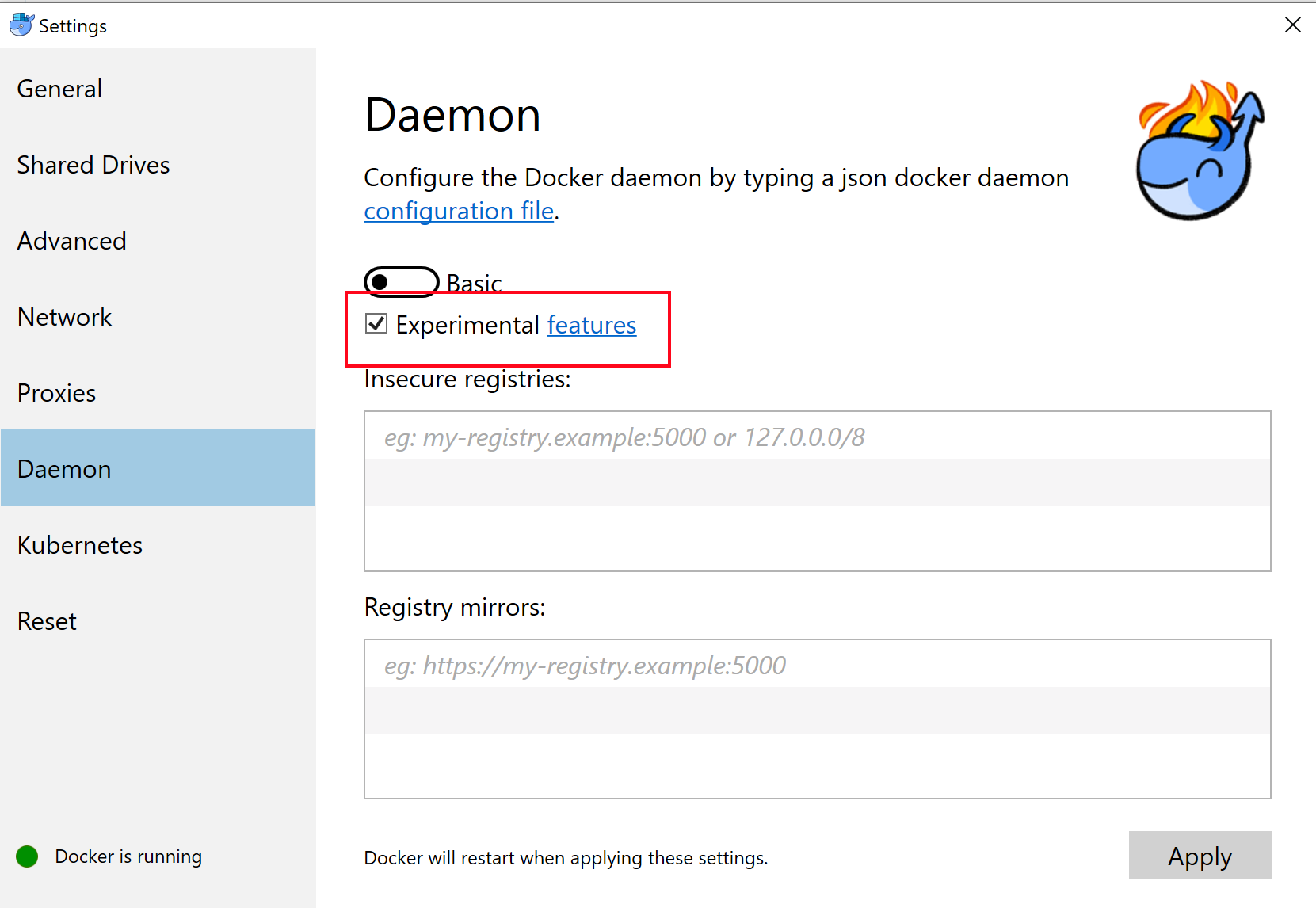

At the time of writing, LCOW is an experimental feature, so it must first be enabled in the Docker for Windows settings.

With it enabled, the Docker command needs the --platform linux flag and looks something like the following:

$ docker run --platform linux -d -p 3001:2368 ghost:2

We can also mount a directory on the Docker host as a volume in the running container to hold Ghost's content (database, images, etc.). If we mount it at the location where Ghost stores content by default, "/var/lib/ghost/content", no configuration changes are required; alternatively, we can mount it elsewhere and point Ghost at the new location using environment variables.

The Docker command is as follows

$ docker run --platform linux -v c:/temp/content:/var/lib/ghost/content -d -p 3001:2368 ghost:2

Now that we are armed with the basics of running an instance of Ghost on our Docker host, let's move on!

Monitoring Ghost with Azure App Insights

Application Insights is an excellent tool for instrumenting and monitoring your applications. The official Ghost codebase does not support App Insights out of the box, and I did not want to fork the codebase and maintain my own version of the code and Docker image. Instead, I came up with an alternative that takes advantage of the fact that Docker images are layered:

- I use the Ghost 2.x Alpine image as the base image.

- I install the applicationinsights npm package.

- Because Ghost is plain JavaScript, I can insert a couple of lines of JS that bootstrap App Insights at application startup.

My final DOCKERFILE looks as follows:

```dockerfile
FROM ghost:2-alpine

# Install the Application Insights SDK globally
RUN npm install -g applicationinsights

# Link the global package into Ghost's install so Ghost's own npm package tree is not rebuilt/validated
RUN cd current && npm link applicationinsights

# Patch Ghost's entry point to start monitoring; the instrumentation key is supplied via an environment variable
# ENV APPINSIGHTS_INSTRUMENTATIONKEY xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx
RUN sed -i.bak -e "/var startTime = Date.now(),/a appInsights = require('applicationinsights'), " \
    -e "/ghost, express, common, urlService, parentApp;/a appInsights.setup().start();" current/index.js
```
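To make the two sed expressions easier to follow, here is a small Python sketch that mimics sed's "a" (append) command on a trimmed excerpt of Ghost 2.x's current/index.js. The excerpt in `SAMPLE_INDEX_JS` is an assumption reconstructed around the two lines the patterns match (the real file contains more code), and the helper treats each pattern as a plain substring rather than a sed regular expression.

```python
def sed_append(text: str, pattern: str, new_line: str) -> str:
    """Mimic `sed '/pattern/a new_line'`: insert new_line after every line
    that contains pattern (plain substring match, not a regex)."""
    out = []
    for line in text.splitlines():
        out.append(line)
        if pattern in line:
            out.append(new_line)
    return "\n".join(out) + "\n"


# Hypothetical excerpt of current/index.js, for illustration only
SAMPLE_INDEX_JS = """\
var startTime = Date.now(),
    debug = require('ghost-ignition').debug('boot:index'),
    ghost, express, common, urlService, parentApp;

debug('First requires...');
"""

# Same two edits as the Dockerfile's sed -e expressions
patched = sed_append(SAMPLE_INDEX_JS, "var startTime = Date.now(),",
                     "appInsights = require('applicationinsights'), ")
patched = sed_append(patched, "ghost, express, common, urlService, parentApp;",
                     "appInsights.setup().start();")
print(patched)
```

The require call is spliced into Ghost's existing var list, so appInsights becomes one more variable declared there, and setup().start() runs immediately after that declaration, before the rest of Ghost's startup code.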

I have created a GitHub repo to track changes, and I also use it in the next step to automate my Docker image builds.

Automating custom image build with Azure Container Registry and Build Tasks

I want to rebuild my Docker image every time the base image changes, so that my custom image always has the latest 2.x version of Ghost. I could run the Docker build and push manually on my Docker host, but instead I automate the process using Azure Container Registry (ACR) and a container build task. Using the Azure CLI, the following command creates the build task on an existing ACR registry:

```shell
$ az acr task create \
    --registry [ACR_REGISTRY_NAME] \
    --name docker-ghost-ai \
    --image ghost:2-alpine-ai \
    --context https://github.com/keyoke/docker-ghost-ai.git \
    --branch master \
    --file 2/alpine/ai/DOCKERFILE \
    --git-access-token [GIT_ACCESS_TOKEN]
```

We can manually run this new task at any time using the following command

$ az acr task run --registry [ACR_REGISTRY_NAME] --name docker-ghost-ai

And we can get the history of the runs using the following command

$ az acr task list-runs --registry [ACR_REGISTRY_NAME] --output table

The image is also rebuilt automatically whenever a change is detected in the base image or in the GitHub repo, which is exactly what I want. Because every build reuses the same Docker image tag, no manual step is needed to switch to the new image when hosting on, for example, Azure App Service.

Hosting our custom image with Azure Web Apps for Containers

Azure App Service is an extremely useful PaaS offering that lets us host web applications on a managed platform (Windows or Linux).

Azure App Service offers various application deployment options; the one I have chosen is containers.

In my case I use Azure App Service for Linux because I want to run Linux-based container images, but Windows containers are also supported on Azure App Service for Windows.

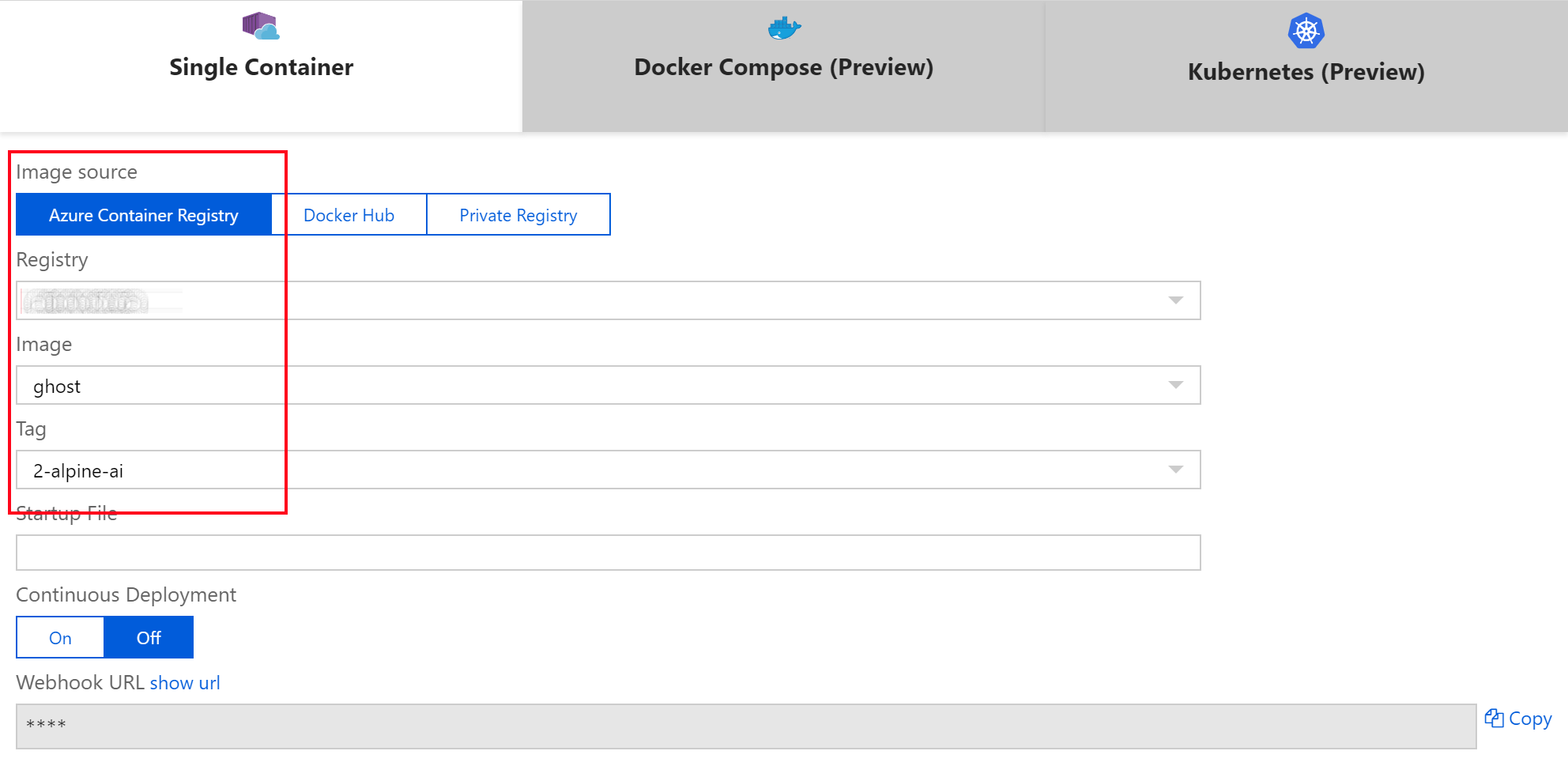

We can either run the Ghost image ghost:2-alpine directly from the public Docker Hub registry or, as in my case, run a custom image from a private Azure Container Registry.

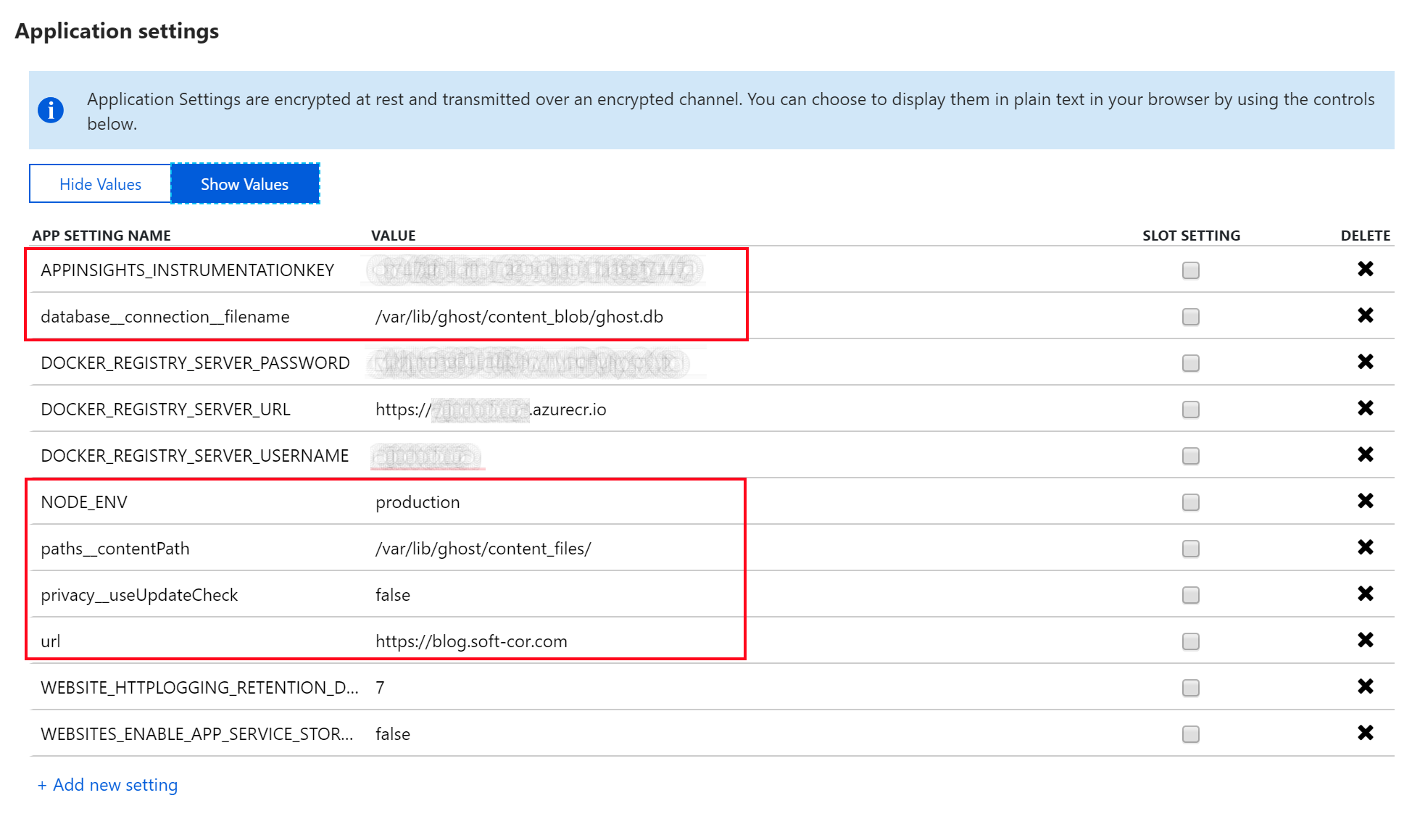

The Ghost Docker image makes it easy to inject configuration through environment variables. On App Service, you do this by adding the relevant configuration items to the application settings in the Azure portal.

As you can see I am providing the following configuration:

- Azure App Insights instrumentation key

- Content path

- Blog URL

- Disabling the Ghost update check

- Database path and file name

- Running in production mode (the default)
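Ghost reads these environment variables through nconf with a double-underscore separator, so a flat name such as "database__connection__filename" overrides the nested key database.connection.filename in Ghost's config. The Python sketch below mimics that mapping for illustration only. The setting names follow Ghost's config keys, but the values (the blog URL, the mount paths from the storage section below, and the ghost.db file name) are assumptions, not a copy of my App Service settings. APPINSIGHTS_INSTRUMENTATIONKEY and NODE_ENV are left out because they are read by the Application Insights SDK and Node.js rather than mapped into Ghost's nested config.

```python
def to_nested_config(settings: dict, separator: str = "__") -> dict:
    """Expand flat NAME__SUB__KEY settings into nested dicts, mirroring how
    Ghost's nconf-based loader interprets environment variables.
    Values are kept as strings."""
    config = {}
    for name, value in settings.items():
        *parents, leaf = name.split(separator)
        node = config
        for key in parents:
            node = node.setdefault(key, {})
        node[leaf] = value
    return config


# Illustrative App Service application settings (values are assumptions)
APP_SETTINGS = {
    "url": "https://myblog.azurewebsites.net",
    "paths__contentPath": "/var/lib/ghost/content_files",
    "database__connection__filename": "/var/lib/ghost/content_blob/ghost.db",
    "privacy__useUpdateCheck": "false",
}

config = to_nested_config(APP_SETTINGS)
print(config)
```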

Storing Ghost data on Azure Storage (Blobs/Files)

I first tried the WEBSITES_ENABLE_APP_SERVICE_STORAGE option, which tells Azure App Service to share the /home/ directory across running instances and ensures this storage persists across container restarts. Unfortunately, I had a number of issues running Ghost in this configuration.

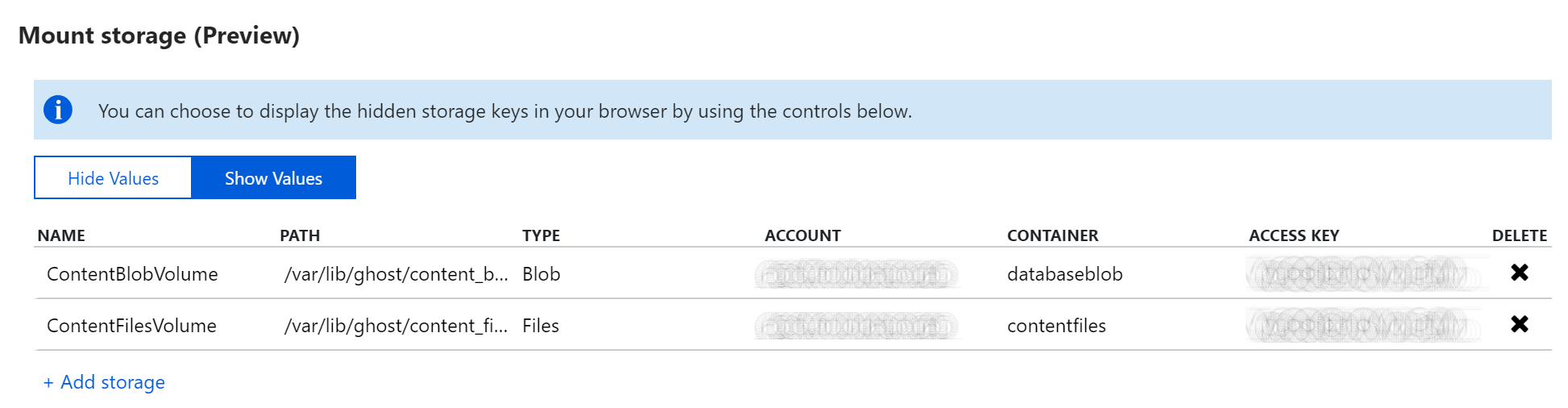

Therefore I decided to use Azure App Service's preview support for mounting an Azure Storage account (Blobs/Files) as a volume in the running container.

I also ran into problems when I tried to put all of the data on File storage alone or on Blob storage alone. These were specifically related to how locking works for the SQLite database file and how symbolic links work for the content files. I didn't investigate these issues further, but I arrived at the following working configuration:

- Azure Blob Storage for the sqlite database

- Azure File Storage for content(Images, Plugins, Logs, Themes etc)

The following Azure CLI commands add the two volumes; each command prints the full list of storage mounts configured for the web app:

```shell
$ az webapp config storage-account add -g gesoftcorblog -n gesoftcorblog \
    --custom-id ContentBlobVolume --storage-type AzureBlob \
    --account-name [Storage Account Name] --share-name databaseblob \
    --access-key [Storage Account Key] --mount-path /var/lib/ghost/content_blob
```

```json
{
  "ContentBlobVolume": {
    "accessKey": "[Storage Account Key]",
    "accountName": "[Storage Account Name]",
    "mountPath": "/var/lib/ghost/content_blob",
    "shareName": "databaseblob",
    "state": "Ok",
    "type": "AzureBlob"
  }
}
```

```shell
$ az webapp config storage-account add -g gesoftcorblog -n gesoftcorblog \
    --custom-id ContentFilesVolume --storage-type AzureFiles \
    --account-name [Storage Account Name] --share-name contentfiles \
    --access-key [Storage Account Key] --mount-path /var/lib/ghost/content_files
```

```json
{
  "ContentBlobVolume": {
    "accessKey": "[Storage Account Key]",
    "accountName": "[Storage Account Name]",
    "mountPath": "/var/lib/ghost/content_blob",
    "shareName": "databaseblob",
    "state": "Ok",
    "type": "AzureBlob"
  },
  "ContentFilesVolume": {
    "accessKey": "[Storage Account Key]",
    "accountName": "[Storage Account Name]",
    "mountPath": "/var/lib/ghost/content_files",
    "shareName": "contentfiles",
    "state": "Ok",
    "type": "AzureFiles"
  }
}
```

The Azure team have also recently added the ability to manage mounted storage via the Azure App Service application settings UI in the Azure portal.

There we can see the mount points we added earlier with the Azure CLI. The configuration items listed in the previous section (the content path and the database path and file name) are what make Ghost use these new mount points.

Simple Ghost backup with Azure Functions

To ensure some continuity after outages or data loss, I built a simple Azure Function, scheduled to run weekly, that triggers a backup of the Ghost data. It is very simple because it relies on built-in functionality in both Ghost and Azure Storage. The code does the following:

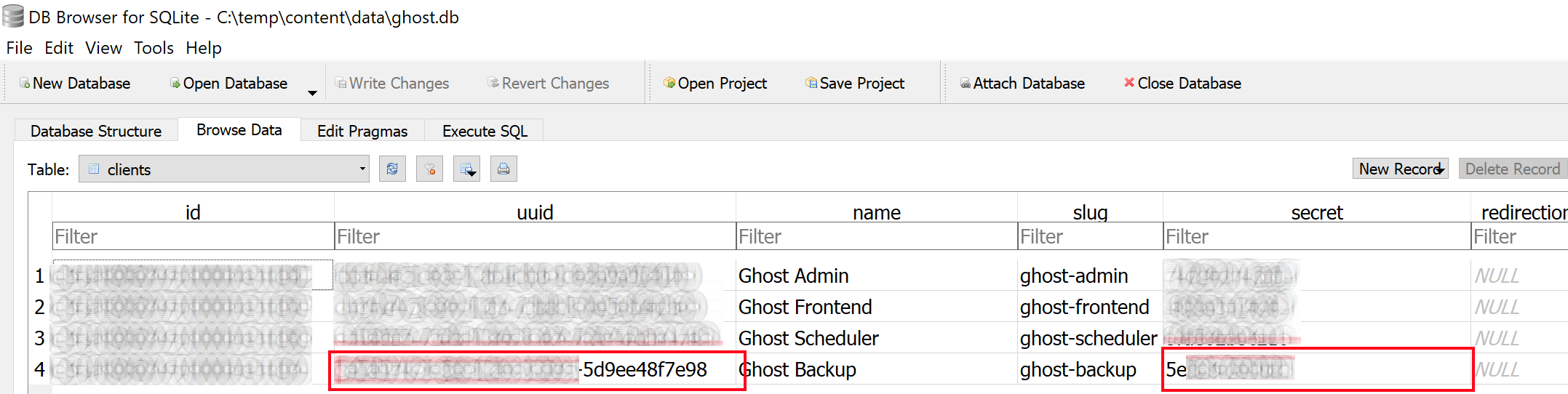

- Export the SQLite database to JSON using Ghost's built-in API "/ghost/api/v0.1/db/backup?client_id=[CLIENT ID]&client_secret=[CLIENT SECRET]". The exported file is written to the data directory under the content root. Use the client ID and secret of the "Ghost Backup" client; you can find these values by opening your SQLite database and browsing the data in the clients table, as shown in the screenshot.

- Using the Azure Storage APIs, create a read-only snapshot of the Azure file share where the Ghost data is stored.
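The backup request itself is a single HTTP POST. The Python sketch below shows, without making any network call, how the request URL can be built and how the backup file name is pulled out of Ghost's response. The response shape ({"db": [{"filename": ...}]}) is taken from the C# function below; the host name, credentials and sample response are hypothetical placeholders.

```python
import json
import posixpath
from urllib.parse import urlencode

# Ghost 2.x (API v0.1) backup endpoint, as used by the function below
BACKUP_PATH = "/ghost/api/v0.1/db/backup"


def backup_url(blog_host: str, client_id: str, client_secret: str) -> str:
    """Build the URL the backup request is POSTed to (credentials are URL-encoded)."""
    query = urlencode({"client_id": client_id, "client_secret": client_secret})
    return f"https://{blog_host}{BACKUP_PATH}?{query}"


def backup_file_name(response_body: str) -> str:
    """Extract the file name from a response shaped like {"db": [{"filename": ...}]}."""
    payload = json.loads(response_body)
    # Ghost reports a path inside the Linux container, so use POSIX path rules
    return posixpath.basename(payload["db"][0]["filename"])


# Hypothetical values for illustration only
url = backup_url("blog.example.com", "ghost-backup", "s3cret")
sample_response = '{"db": [{"filename": "/var/lib/ghost/content_files/data/example-blog.ghost.json"}]}'
print(url)
print(backup_file_name(sample_response))
```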

The code is below; I also have a GitHub repo that tracks my changes.

```csharp
using Microsoft.Azure.WebJobs;
using Microsoft.Extensions.Configuration;
using Microsoft.Extensions.Logging;
using Microsoft.WindowsAzure.Storage;
using Microsoft.WindowsAzure.Storage.File;
using Newtonsoft.Json.Linq;
using System;
using System.Net;
using System.Net.Http;
using System.Threading.Tasks;

namespace SimpleGhostBackup
{
    public static class SimpleGhostBackup
    {
        // NCRONTAB schedule (second minute hour day month day-of-week): midnight every Sunday
        [FunctionName("SimpleGhostBackup")]
        public static async Task Run([TimerTrigger("0 0 0 * * Sun")] TimerInfo myTimer, ILogger log, ExecutionContext context)
        {
            log.LogInformation($"SimpleGhostBackup function started execution at: {DateTime.Now}");

            // Settings come from local.settings.json when running locally and from app settings in Azure
            var config = new ConfigurationBuilder()
                .SetBasePath(context.FunctionAppDirectory)
                .AddJsonFile("local.settings.json", optional: true, reloadOnChange: true)
                .AddEnvironmentVariables()
                .Build();

            var clientId = config["ClientId"];
            var clientSecret = config["ClientSecret"];
            var blogUrl = config["BlogUrl"];
            var storageShareName = config["StorageShareName"];
            var storageConnection = config["StorageConnectionString"];

            var client = new HttpClient
            {
                BaseAddress = new Uri($"https://{blogUrl}")
            };

            // Ask Ghost to export its database to JSON in the content "data" folder
            log.LogInformation("Requesting Ghost backup");
            var response = await client.PostAsync($"/ghost/api/v0.1/db/backup?client_id={clientId}&client_secret={clientSecret}", null);

            if (response.StatusCode == HttpStatusCode.OK)
            {
                // The response content contains the name of the backup file that was created
                var content = await response.Content.ReadAsStringAsync();
                var json = JObject.Parse(content);

                // Connect to the Azure file share that holds the Ghost content
                CloudStorageAccount storageAccount = CloudStorageAccount.Parse(storageConnection);
                CloudFileClient fileClient = storageAccount.CreateCloudFileClient();
                CloudFileShare share = fileClient.GetShareReference(storageShareName);
                CloudFileDirectory root = share.GetRootDirectoryReference();
                CloudFileDirectory data = root.GetDirectoryReference("data");

                // Does the data folder exist?
                if (await data.ExistsAsync())
                {
                    log.LogInformation("Data folder exists.");

                    // Get the backup file name (Ghost returns a full path inside the container)
                    var filename = System.IO.Path.GetFileName((string)json["db"][0]["filename"]);
                    CloudFile file = data.GetFileReference(filename);

                    // Confirm that the backup file exists before snapshotting the share
                    if (await file.ExistsAsync())
                    {
                        log.LogInformation("Backup file created - {FileName}", filename);
                        log.LogInformation("Creating Azure file share snapshot");
                        await share.SnapshotAsync();
                    }
                    else
                    {
                        log.LogWarning("Backup file {FileName} not found; no snapshot created", filename);
                    }
                }
            }
            else
            {
                log.LogError("Ghost backup request failed with status code {StatusCode}", response.StatusCode);
            }

            log.LogInformation($"SimpleGhostBackup function ended execution at: {DateTime.Now}");
        }
    }
}
```
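The TimerTrigger schedule uses the six-field NCRONTAB format expected by Azure Functions, where the first field is seconds rather than minutes, and schedules are evaluated in UTC by default. The Python sketch below only labels each field of "0 0 0 * * Sun" to show that it means midnight every Sunday; it is an illustration, not a full cron parser.

```python
# Field order of the six-field NCRONTAB expressions used by Azure Functions timer triggers
FIELDS = ("second", "minute", "hour", "day", "month", "day_of_week")


def describe_ncrontab(expression: str) -> dict:
    """Split a six-field NCRONTAB expression and label each field.

    Illustration only: ranges, lists and step values are not validated.
    """
    parts = expression.split()
    if len(parts) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} fields, got {len(parts)}: {expression!r}")
    return dict(zip(FIELDS, parts))


schedule = describe_ncrontab("0 0 0 * * Sun")
for field, value in schedule.items():
    print(f"{field:>11}: {value}")
```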

The function can be published using the Azure Functions Core Tools or directly from the UI in Visual Studio 2017 (version 15.9).

We can now restore the blog in the event of data loss. This concludes the steps I performed to set up and run my private Ghost blog on Azure. Feel free to provide feedback or questions via the comments section below.

Issues

Error: SQLITE_CORRUPT: database disk image is malformed

After my blog had been up and running for a few days, I noticed the site had become unavailable and this exception was being logged to the application logs. Some research suggests this can happen if the database file is not closed cleanly (source: sqliteexception).

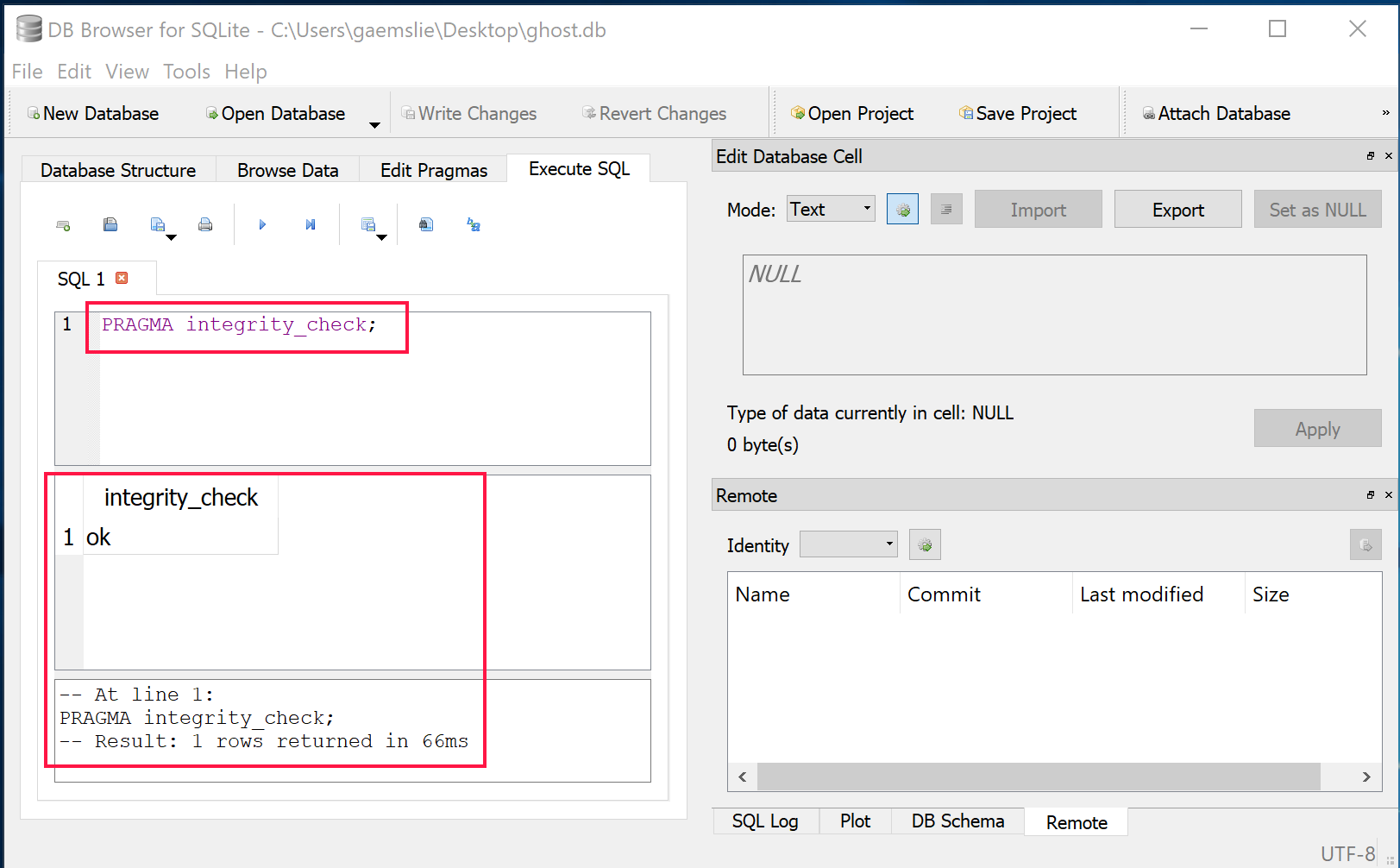

I downloaded the ghost.db file from my Azure Storage account and opened it in the DB Browser for SQLite tool.

I then ran the following SQL command to verify the database integrity:

PRAGMA integrity_check;

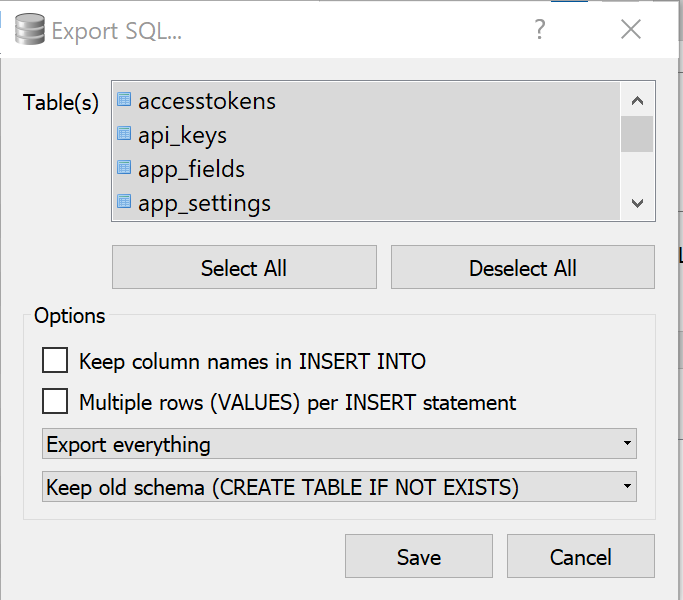

The database integrity appeared to be intact, so I tried the next step typically recommended for this type of issue: export the entire database to SQL and re-import it into a new database. To export, use File -> Export -> Database to SQL file.

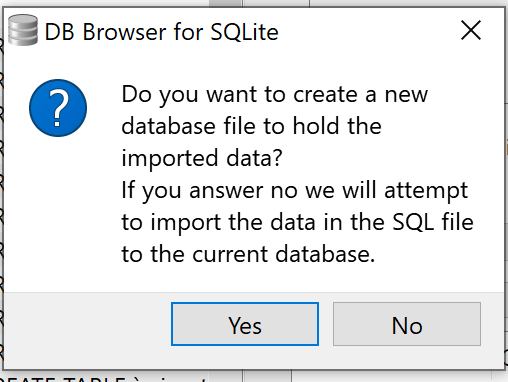

Then re-import it into a new database with File -> Import -> Database from SQL file.

Finally, replace the original database file with the newly created one.

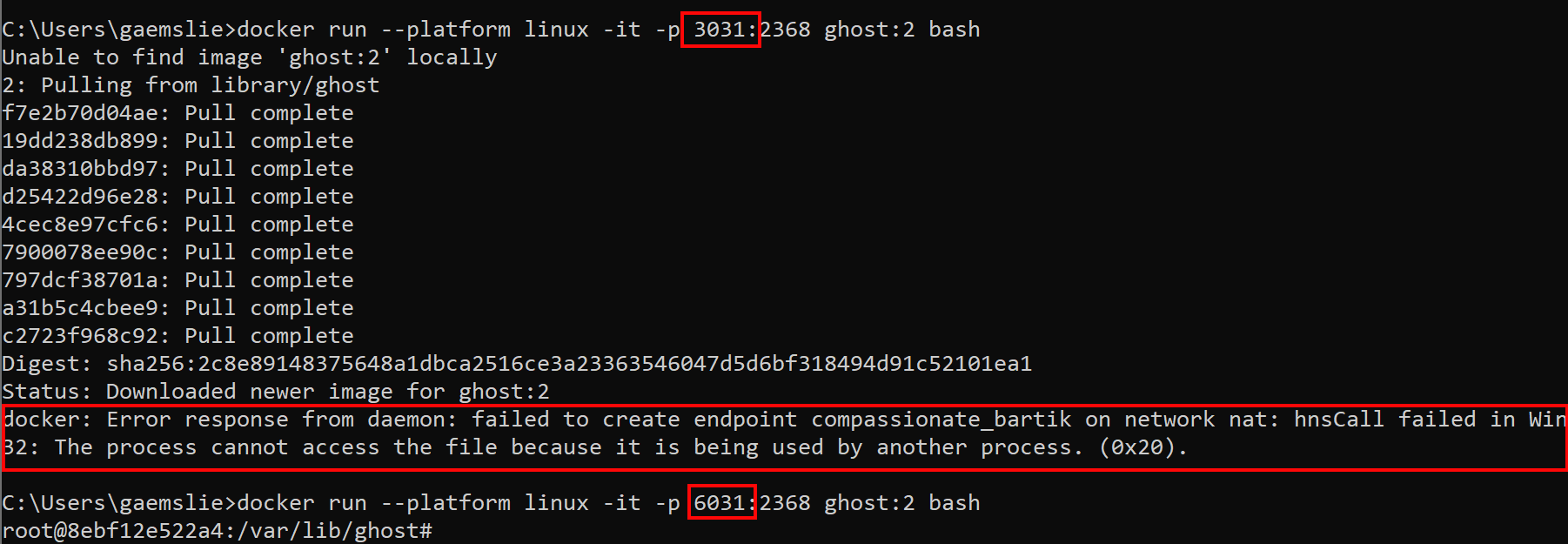
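The same check-and-rebuild can also be scripted with Python's built-in sqlite3 module, which is handy if you would rather not click through the GUI. PRAGMA integrity_check returns a single row "ok" when no problems are found, and Connection.iterdump() produces an SQL script comparable to DB Browser's export. This is a sketch under assumptions: the helper names are my own, and it should be run against a downloaded copy of ghost.db, never the live file.

```python
import sqlite3
from contextlib import closing
from pathlib import Path


def _open_read_only(db_path: Path) -> sqlite3.Connection:
    # mode=ro: never create or modify the database being inspected
    uri = Path(db_path).resolve().as_uri() + "?mode=ro"
    return sqlite3.connect(uri, uri=True)


def integrity_ok(db_path: Path) -> bool:
    """Run PRAGMA integrity_check; SQLite returns a single row 'ok' if no problems are found."""
    with closing(_open_read_only(db_path)) as conn:
        rows = conn.execute("PRAGMA integrity_check").fetchall()
    return rows == [("ok",)]


def rebuild(src: Path, dst: Path) -> None:
    """Dump src to an SQL script and replay it into a brand-new database at dst,
    the scripted equivalent of exporting to SQL and importing into a new database."""
    if Path(dst).exists():
        raise FileExistsError(f"refusing to overwrite {dst}")
    with closing(_open_read_only(src)) as source:
        script = "\n".join(source.iterdump())
    with closing(sqlite3.connect(dst)) as target:
        target.executescript(script)
```

For example, check integrity_ok(Path("ghost.db")) first, then call rebuild(Path("ghost.db"), Path("ghost-rebuilt.db")) and upload the rebuilt file in place of the original.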

ERROR: Error response from daemon: failed to create endpoint [container name] on network nat: hnsCall failed in Win32: The process cannot access the file because it is being used by another process. (0x20).

All of a sudden I started receiving this error when running the Ghost container locally on my development machine, even though the same command had worked before.

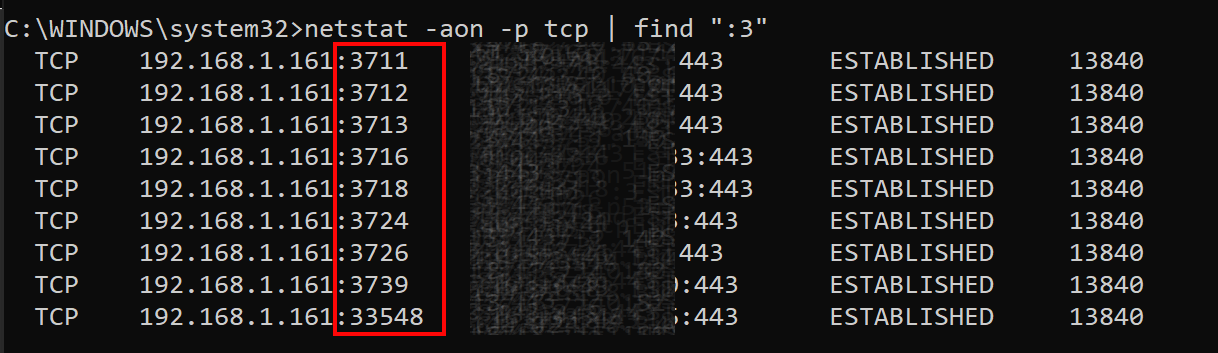

Based on what I have read, a common cause of this error is using a port that is already in use. However, when I check which process is listening on this port, I don't find any.

As a workaround, I found that using a host port of 3550 or higher works fine, for example:

$ docker run --platform linux -v c:/temp/content:/var/lib/ghost/content -d -p 3550:2368 ghost:2

I did not have time to investigate further; it seems likely that something changed in Docker for Windows or in Windows itself.

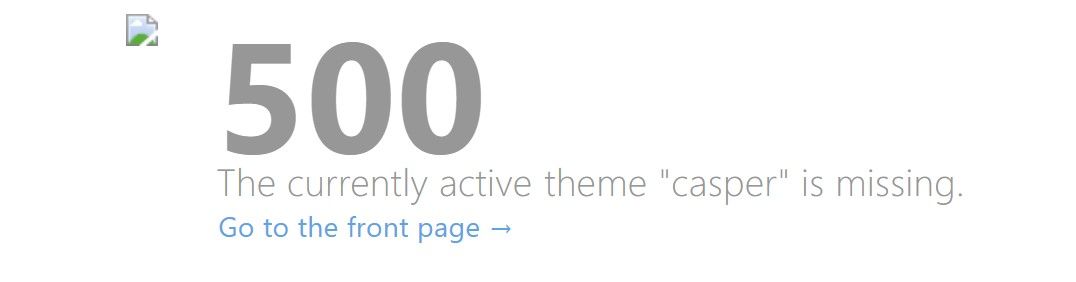
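Before picking a host port for -p, it can help to check whether the port can actually be bound. The Python sketch below tries to bind a TCP socket on each candidate port and returns the first one that succeeds. It rests on assumptions: the port range is hypothetical, and a successful bind from Python does not guarantee Docker's NAT networking will accept the port, although on Windows a bind to a port inside an OS-reserved range typically fails too. The reserved ranges themselves can be listed with "netsh interface ipv4 show excludedportrange protocol=tcp", which may be worth checking as a possible explanation for the error above; I have not confirmed that it is the cause here.

```python
import socket


def is_port_free(port: int, host: str = "0.0.0.0") -> bool:
    """Return True if a TCP socket can bind to (host, port) right now."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            # Port is in use or, on Windows, typically inside a reserved range
            return False
    return True


def first_free_port(start: int, end: int, host: str = "0.0.0.0") -> int:
    """Return the first port in [start, end] that can be bound."""
    if not 0 < start <= end <= 65535:
        raise ValueError("ports must satisfy 0 < start <= end <= 65535")
    for port in range(start, end + 1):
        if is_port_free(port, host):
            return port
    raise RuntimeError(f"no bindable TCP port between {start} and {end}")


# Hypothetical range starting at 3001, the host port used earlier in this post
port = first_free_port(3001, 3600)
print(f"docker run --platform linux -v c:/temp/content:/var/lib/ghost/content -d -p {port}:2368 ghost:2")
```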

Error: The currently active theme "casper" is missing

After following the steps outlined in this blog post you may see the following error displayed when navigating to your new Ghost blog.

This is caused by the way the Ghost Docker image bootstraps the Node.js application on container startup, combined with how we override the content paths using Ghost's config environment overrides.

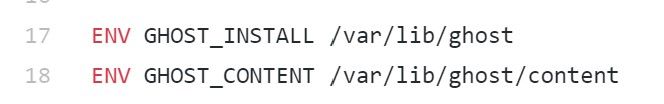

The image's Dockerfile defines two default environment variables:

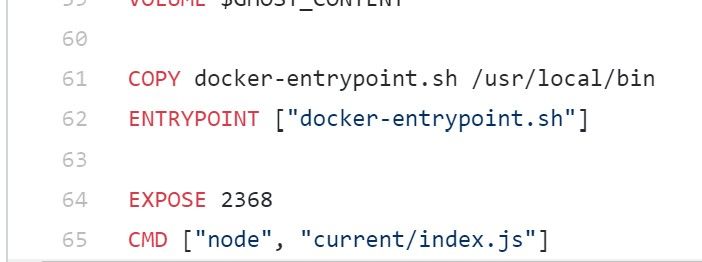

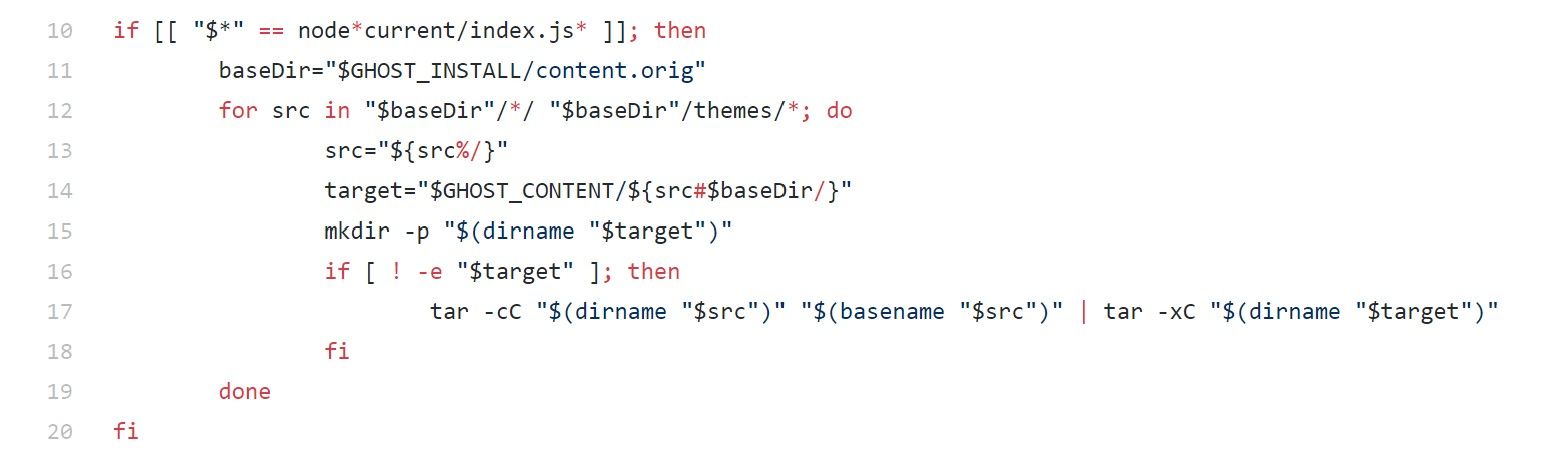

The container's entrypoint is a bootstrap shell script.

One of the things this script does is create the default content directory structure and place the default theme, Casper, in the themes folder.

To do this, it uses the GHOST_CONTENT environment variable as the target directory. At this point our Node.js application has not yet booted, so this variable is still set to "/var/lib/ghost/content". We override the default content path with the Ghost config setting "paths__contentPath", but as I understand it that setting is only read by Ghost itself once Node.js starts, so it has not yet taken effect at this stage of container startup.
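The timing problem is easier to see with a simplified model of the seeding step. The Python sketch below is my approximation of the entrypoint's behaviour, not the actual script: it copies each default top-level folder, then each default theme, from a pristine copy of the content folder into the directory named by GHOST_CONTENT, skipping anything that already exists. The function name and the idea of passing both directories as parameters are assumptions made for illustration.

```python
import shutil
from pathlib import Path


def seed_content(base_dir: Path, ghost_content: Path) -> list:
    """Approximate model of the entrypoint's seeding step: copy default
    top-level folders, then individual themes, from base_dir into
    ghost_content without overwriting anything. Returns the paths created."""
    created = []
    sources = sorted(p for p in base_dir.iterdir() if p.is_dir())
    themes = base_dir / "themes"
    if themes.is_dir():
        sources += sorted(themes.iterdir())
    for src in sources:
        target = ghost_content / src.relative_to(base_dir)
        target.parent.mkdir(parents=True, exist_ok=True)
        if target.exists():
            continue  # never overwrite existing content
        if src.is_dir():
            shutil.copytree(src, target)
        else:
            shutil.copy2(src, target)
        created.append(target)
    return created
```

Because the model only ever writes to ghost_content, seeding with the default "/var/lib/ghost/content" leaves the directory that paths__contentPath points at without a themes/casper folder, which matches the error above; passing the same value as paths__contentPath makes the theme land where Ghost looks for it.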

We have two options to resolve this:

- Browse to https://[your web app].azurewebsites.net/ghost, complete the blog setup, and manually upload the Casper theme. Download the Casper release that matches your Ghost version.

- Create a new configuration setting for your web app that overrides the default GHOST_CONTENT, and set it to the same value as paths__contentPath. Once Ghost has booted successfully the first time, you can delete this setting.

In the future I may investigate this issue further but for now this will get you started.