Options for storing Azure Pipelines YAML files

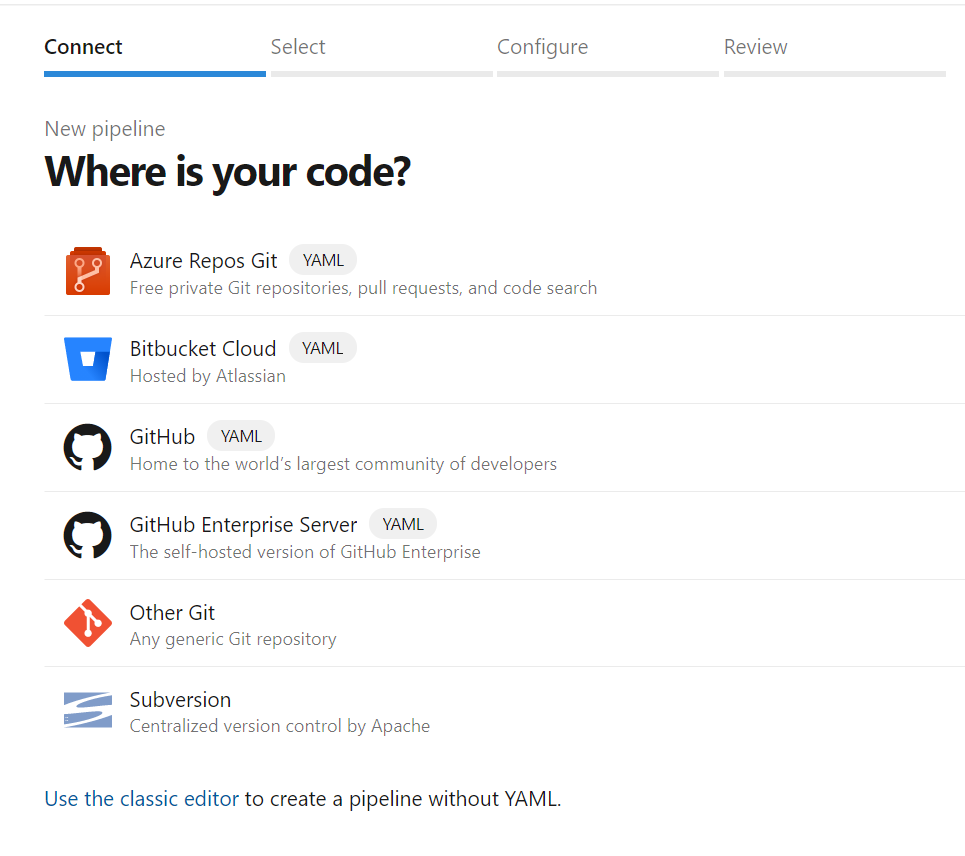


This blog post is part of a series covering the challenges of adopting the Azure DevOps SaaS offering in highly regulated industries. The first post covered the connectivity challenges experienced by some of the enterprises I have worked with. Subsequent posts explored some of the options for addressing these challenges. If you missed them, feel free to check them out:

- Challenges adopting the Azure DevOps SaaS offering in highly regulated industries - Connectivity

- Triggering Azure Pipelines with an offline source repository

- Triggering Azure Pipelines with an offline artefact repository

First, let's take a look at the options for running CI/CD with Azure DevOps (including Azure DevOps Server, the on-premises offering). There are essentially three possibilities when leveraging Azure Pipelines:

- Classic Pipelines, used for Continuous Integration and originally called Team Foundation Build, have a visual designer and store the pipeline definitions in a database.

- Release Pipelines are essentially what was called Visual Studio Release Management in the boxed version of the product, and provide Continuous Deployment functionality. As with Team Foundation Build, the pipeline definitions are stored in a database and are designed using the UI.

- YAML Pipelines are the modern, YAML-based approach to building pipelines, where the pipeline definitions are stored alongside the source code in a source control repository rather than in a database. The Azure DevOps UI provides a very lightweight editor for creating and managing these pipelines.

I would recommend YAML Pipelines for all new CI/CD workloads, and I would certainly start looking at moving any classic or release pipelines over when possible.
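To make the "pipeline definition stored alongside the source code" idea concrete, here is a minimal sketch of what such a file might look like. The file name `azure-pipelines.yml`, the `main` branch and the placeholder build step are assumptions for illustration only, not taken from a real project.

```yaml
# azure-pipelines.yml, committed in the same repository as the application code
# (branch name and build step are illustrative assumptions)
trigger:
  branches:
    include:
      - main            # run the pipeline on pushes to main

pool:
  vmImage: ubuntu-latest  # Microsoft-hosted agent

steps:
  - checkout: self        # check out the repository that contains this YAML file
  - script: echo "Building commit $(Build.SourceVersion)"
    displayName: Build
```

Because the definition is just a file in the repository, changes to the pipeline go through the same branching and review process as changes to the code.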

So where can we store our YAML pipeline files? If we take a look at the Azure Pipelines creation experience, we are provided with a number of options, which can be seen below.

As our pipeline definition is stored alongside our code, it makes sense that we can store these files in any of the version control systems supported by Azure DevOps.

This is great! So what's the challenge then? We are leveraging a SaaS offering. Suppose, for argument's sake, that we are in a highly regulated industry and use GitHub Enterprise on-premises for storing application source code. Naturally, you would also want to store your pipeline YAML files on-premises in your GitHub repository.

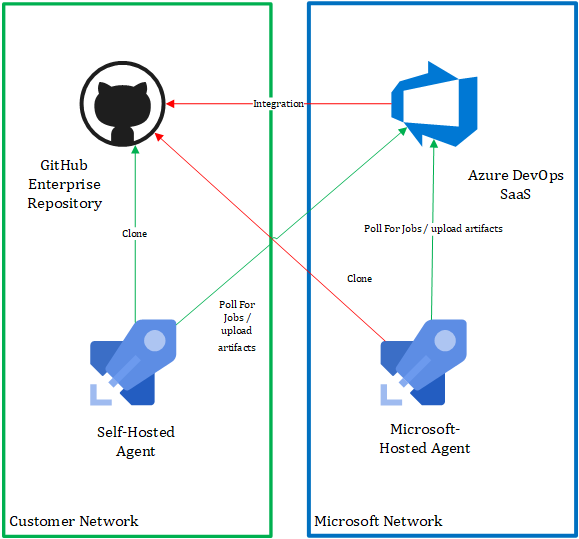

Could you do it? Well, yes of course... but it depends. A key point here is that for Azure DevOps to materialize a pipeline based on the YAML file stored in your source control repository, it requires line of sight to this resource from a networking perspective. The accompanying diagram shows some of the communication which occurs between Azure DevOps and GitHub Enterprise Server; in our scenario the important connectivity is labelled "Integration".

As you can see, this network traffic originates from a Microsoft network. In my experience, highly regulated enterprises typically do not feel comfortable making changes to their firewalls to allow inbound traffic originating from outside their network.

The key issue is that we would need to whitelist an entire Azure region's IP address space, and up to now we have not had fine-grained controls for allowing this traffic. The Azure DevOps team is, however, currently working on making this easier by introducing Azure Service Tags for traffic originating from Azure DevOps Scale Units.

In the meantime, what other options do we have? Well, a simple solution is to store our YAML files separately from our application source code, for example in an Azure Repos repository which is visible to the Azure DevOps Service.
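As a rough sketch of how this could look, the pipeline file below lives in Azure Repos, while the application source is fetched by a self-hosted agent running inside the corporate network. The pool name `OnPremAgents`, the host `ghe.example.internal` and the repository path are hypothetical, and authentication to GitHub Enterprise Server is deliberately left out.

```yaml
# azure-pipelines.yml, stored in an Azure Repos repository (not next to the app code)
# All names below are hypothetical placeholders.
trigger: none             # commits to the on-premises repository are not visible to
                          # Azure DevOps, so this run has to be started some other way

pool:
  name: OnPremAgents      # assumed self-hosted agent pool inside the corporate network

steps:
  - checkout: none        # skip checking out the Azure Repos repository itself
  - script: git clone --depth 1 https://ghe.example.internal/my-org/my-app.git app
    displayName: Clone application source from GitHub Enterprise Server
    # credentials are omitted here; a real setup would need to supply them securely
  - script: echo "Application build steps go here"
    workingDirectory: $(System.DefaultWorkingDirectory)/app
    displayName: Build
```

The idea is that the Azure DevOps Service only needs to read the YAML from Azure Repos, while the clone of the application code happens from the agent, inside the network. The agent itself connects outbound to Azure DevOps, so no inbound firewall rule is needed for this part.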

Finally, we always have the option of running Azure DevOps Server within our on-premises network, but this wouldn't be my first choice.